Max for Live: Extreme Time Stretching with Spectral Stretch

In this post, I share some insights into how the Spectral Stretch Max for Live device was created.

There are many ways to time-stretch audio without transposing it. Some time-domain methods build on Pierre Schaeffer's Phonogène. Another approach is to process the sound in the spectral domain, using a phase vocoder. In this case, the audio samples are converted to spectral data through a Fast Fourier Transform (FFT). Even when the focus is on extreme time stretching, the details of the phase vocoder implementation have important consequences for the sound quality and for the tool's flexibility in live use.

Before introducing the Max for Live device Spectral Stretch, let's have a look at a selection of four possible algorithms:

- Paulstretch

- Max Live Phase Vocoder

- Interpolation between recorded spectra

- Stochastic Re-synthesis from a recorded sonogram

Paulstretch

Paul's Extreme Sound Stretch, also known as Paulstretch, is an algorithm designed by Paul Nasca for extreme time stretching. You might have heard one application already: to date, the video Justin Bieber 800% Slower has received more than 3 million views. The algorithm has been included in Audacity since version 2.2.

Paul uses a traditional Phase Vocoder technique, with potentially very large analysis windows. Here is a picture where he explains how that works:

With Paulstretch, you can specify a very large analysis window. In the Audacity interface, the user gives a "time resolution" in seconds; this number, translated to a number of samples, is rounded to a power of two used as FFT size.
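As a rough illustration of that conversion, here is a minimal Python sketch. The function name `fft_size_from_resolution`, the default sample rate, and the choice of rounding to the nearest power of two are all assumptions made for this example; Audacity may round differently.

```python
def fft_size_from_resolution(seconds: float, sample_rate: int = 44100) -> int:
    """Turn a time resolution in seconds into a power-of-two FFT size.

    Assumption: we round to the NEAREST power of two. The actual
    rounding rule used by Audacity is not stated in this post.
    """
    samples = max(1, round(seconds * sample_rate))
    lower = 1 << (samples.bit_length() - 1)  # largest power of two <= samples
    upper = lower << 1                       # next power of two above it
    return lower if samples - lower < upper - samples else upper


if __name__ == "__main__":
    # A 0.25 s resolution at 44.1 kHz is 11025 samples.
    print(fft_size_from_resolution(0.25))
```

Even a quarter of a second already gives a window of several thousand samples, which is why Paulstretch windows are described as very large.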

Max Live Phase Vocoder

In 2006, Richard Dudas and Cort Lippe published an excellent Max phase vocoder tutorial, which allows for live extreme time stretching. In their implementation, sound is simultaneously analyzed and re-synthesized. You can't get FFT window sizes as large as in Paulstretch: at the time of writing, the [fft~] object in Max allows a maximum FFT size of 4096 samples. In the second part of the phase vocoder tutorial, the authors introduce a small randomization of phases, which you can adjust to produce spectral variations similar to the Paulstretch effect. Another level of blurring can be introduced by randomizing the playback position. This is common when granular analysis/synthesis is used for time stretching and freeze effects.
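To make the idea of an adjustable phase randomization concrete, here is a minimal Python sketch operating on one frame of complex FFT bins. It is not the tutorial's Max patch: the function name `randomize_phases` and the `amount` parameter (0 keeps the original phases, 1 makes them fully random) are assumptions chosen for illustration.

```python
import cmath
import math
import random


def randomize_phases(bins, amount, rng=random):
    """Return a copy of `bins` with each phase shifted by a random offset.

    bins   : list of complex FFT bins for one analysis frame
    amount : 0.0 leaves phases untouched, 1.0 spreads them over +/- pi
    rng    : object with a uniform(a, b) method (hypothetical hook for tests)
    """
    out = []
    for b in bins:
        magnitude, phase = cmath.polar(b)
        offset = rng.uniform(-math.pi, math.pi) * amount
        # The magnitude is preserved; only the phase is perturbed.
        out.append(cmath.rect(magnitude, phase + offset))
    return out


if __name__ == "__main__":
    frame = [1 + 0j, 0 + 2j, -0.5 + 0.5j]
    print(randomize_phases(frame, 0.2))
```

Because only the phases change, the magnitude spectrum of the frame stays the same while its fine temporal structure gets smeared.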

Interpolation between recorded spectra

In the two previous examples, interpolation from one analysis window to the next is realized with randomization. You can also interpolate linearly between successive values before re-synthesizing the sound. In that case, you perform the analysis live, store the successive frames of spectral data, and perform the interpolation live when playing the sound back. That allows a live modification of the playback speed, but the resulting sound can be perceived as more static and less interesting than with some randomization. More details are available in the article A Tutorial on Spectral Sound Processing with Max/MSP and Jitter.
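The linear variant can be sketched as follows in Python. This is a simplified illustration, not the code from the article: it assumes each stored frame is a plain list of magnitudes, reads at a fractional frame index, and leaves phase handling out entirely. The name `interpolate_frame` is hypothetical.

```python
def interpolate_frame(frames, position):
    """Linearly interpolate between the two stored frames around `position`.

    frames   : list of frames, each a list of per-bin magnitudes
    position : fractional frame index (e.g. 3.25 is a quarter of the way
               from frame 3 to frame 4); clamped to the recorded range
    """
    last = len(frames) - 1
    position = min(max(position, 0.0), float(last))
    i = int(position)
    j = min(i + 1, last)
    t = position - i  # weight of the next frame
    return [(1.0 - t) * a + t * b for a, b in zip(frames[i], frames[j])]


if __name__ == "__main__":
    recorded = [[0.0, 2.0], [1.0, 4.0]]
    print(interpolate_frame(recorded, 0.25))
```

Slowing playback down simply means advancing `position` by a smaller step per output frame, which is why the speed can be changed live.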

Stochastic Re-synthesis from a recorded sonogram

As in the previous section, we store the raw spectral data. At re-synthesis time, we perform a weighted stochastic selection of data between one frame and the next. You could call this a stochastic interpolation. You can adjust the parameters live, while keeping the sound color more alive than with linear interpolation. That works even with a playback speed of zero: I used this approach in the Spectral Freeze Pro Max for Live device. Another advantage is that you can adjust the time frame used for the re-synthesis. That's the Blur parameter in Spectral Stretch. For more details, have a look at this 2008 video about extreme time stretching with Max.
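One possible reading of this stochastic interpolation is sketched below in Python. It is an assumption-laden illustration, not the device's actual implementation: the selection is made independently for each bin, the fractional position is used as the probability of picking the next frame, and `blur` is modeled as a random jitter of the read position measured in frames. The name `stochastic_frame` is hypothetical.

```python
import random


def stochastic_frame(frames, position, blur=0.0, rng=random):
    """Build one output frame by randomly picking each bin from nearby frames.

    frames   : list of stored frames, each a list of per-bin values
    position : fractional frame index of the playback head
    blur     : assumed meaning: max jitter of the read position, in frames
    rng      : object with uniform() and random() (hook for reproducibility)
    """
    last = len(frames) - 1
    out = []
    for k in range(len(frames[0])):
        # Jitter the read position independently for every bin.
        p = position + rng.uniform(-blur, blur)
        p = min(max(p, 0.0), float(last))
        i = int(p)
        t = p - i
        # Pick the next frame with probability t, the current one otherwise.
        src = min(i + 1, last) if rng.random() < t else i
        out.append(frames[src][k])
    return out


if __name__ == "__main__":
    recorded = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(stochastic_frame(recorded, 0.5, blur=0.5))
```

Calling the function repeatedly with the same `position` still yields different frames, which is what keeps the sound moving when playback is frozen.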

Extreme Time Stretching in Max for Live

In the Spectral Stretch Max for Live device, I use the fourth algorithm presented above: stochastic re-synthesis.

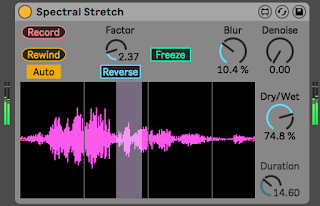

You can adjust the sound with the following controls:

- Record: starts recording the track's sound input in the device.

- Rewind: places the playback head at the beginning of the sound.

- Auto: when on, Rewind is triggered automatically with Record.

- Stretch Factor: sets how much longer the sound will be, adjustable from 1 to 1000.

- Reverse: reverses the playback direction.

- Freeze: stops the playback head in place. As long as it is on, the playback speed is 0.

- Blur: adjusts the width of the time window from which the stochastic re-synthesis draws.

- Denoise: applies a spectral noise gate, which changes the sound texture.

- Dry/Wet Mix: adjusts the balance between the direct sound from the track input and the sound from the device.

- Duration: adjust the length of the recorded sound. When sound duration is modified, the sound is reset.

- Click & Drag on the waveform: click the waveform to reposition the playback head. Click and drag to travel through the sound wave.
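To show how some of these controls could interact, here is a small Python sketch. It is only a guess at the underlying logic: the function names, the mapping from Stretch Factor to speed (speed = 1 / stretch), and the hard-threshold form of the gate are assumptions, not a description of the device's internals.

```python
def playback_speed(stretch, reverse=False, freeze=False):
    """Playback head speed relative to normal speed.

    Assumed mapping: a stretch factor of N plays N times slower,
    Reverse flips the sign, and Freeze forces the speed to 0.
    """
    if freeze:
        return 0.0
    stretch = min(max(stretch, 1.0), 1000.0)  # control range from the list above
    speed = 1.0 / stretch
    return -speed if reverse else speed


def spectral_gate(magnitudes, threshold):
    """Simple spectral noise gate: silence every bin below `threshold`."""
    return [m if m >= threshold else 0.0 for m in magnitudes]


if __name__ == "__main__":
    print(playback_speed(4))                  # forward, four times slower
    print(playback_speed(4, reverse=True))    # same speed, backwards
    print(spectral_gate([0.1, 0.5, 0.05], 0.1))
```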

In this picture, the parameters available for MIDI mapping are highlighted:

